Take part in the prize draw and win a refund of your order


(You will receive a link to submit a review by email within 7 days of the product's delivery date)

LED mirrors in the Artforma store

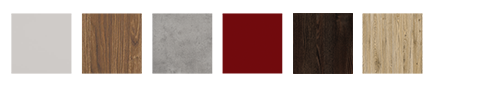

Choose a recognized European manufacturer of bathroom mirrors that has been on the market for over 20 years. Every mirror tells its own story: thanks to our advanced configuration system, customers can tailor each mirror to their own preferences. Decide on every element of your order and transform your interior with an illuminated mirror fit for the 21st century. Your own mirror size, your choice of light color, an interactive smart mirror with your favorite apps? It's all possible!

Mirror installed? Post a photo with the hashtag #Artforma_com to join the exclusive group of thousands of satisfied customers!

Products from mirror manufacturer Artforma

Bathroom mirrors

A bathroom mirror is one of the most important fixtures in any bathroom. To make it as functional as possible, the best choice is an illuminated one! LED-lit mirrors make everyday grooming easier and work perfectly both for applying makeup and for shaving. No more poor visibility: from now on, your makeup will be precise and shaving easier than ever before.

LED mirrors are an excellent, energy-efficient solution

LED lights have a long service life and are ecological and environmentally friendly. They contain no toxic materials, and you certainly won't burn yourself on them: they give off plenty of light but very little heat.

As a manufacturer of mirrors with LED lighting, Artforma guarantees that its products are exceptionally economical. LED bulbs can last for several years and draw far less power than conventional bulbs, which makes them much cheaper to run.

A bathroom mirror with LED lighting brings a whole new quality to your morning routine!

Mirrored bathroom cabinets

In a small bathroom, every bit of storage space is worth its weight in gold. Mirrored bathroom cabinets are ideal for rooms like these!

Two in one: a bathroom mirror and storage for your essential cosmetics!

An illuminated LED mirror built into a cabinet is perfect for daily grooming. It lights up your face so you can apply flawless makeup. Cabinets 100 cm wide and 72 cm high fit most bathrooms and solve the problem of limited space.

Decorative mirrors

Decorative mirrors are the perfect complement to any interior, whether a living room, a bedroom or a bathroom. An exclusive mirror adds subtle elegance to your home and makes it look larger.

Mirrors with LED backlighting are the best choice there is!

Our range includes mirrors with frames in a variety of motifs. A geometric motif suits a minimalist décor, while a jungle motif gives any bathroom a unique character. Decorative flowers on the mirror frame, in turn, warm up a room and make it more colorful.

Additional accessories

A modern LED mirror can be fitted with a range of additional accessories that make everyday life easier and more pleasant.

A bathroom mirror that doesn't fog up? Yes, it's finally possible! A bathroom mirror manufacturer should meet its customers' needs, which is why we designed our mirrors for everyone who is tired of poor visibility and steamed-up glass.

Time and weather displays make it easier to get out of the house every day.

A mirror with a clock makes getting ready on time easier than ever before.

Further options include a small cosmetic mirror as an add-on to the main mirror, Bluetooth, and battery power.

Enjoy your smart LED mirror without interruption!

Mirrors in custom sizes and shapes

Looking for the perfect mirror for your home? Great, you've just found it! How can we be so sure? Our mirrors come in any size and shape.

A mirror with LED lighting saves energy and money and will serve you reliably for many years. Elegant, modern mirrors add character to your interior, so they are definitely worth choosing!

The wide range of sizes we offer guarantees that you'll find a mirror tailored to your needs! We carry classic sizes ideal for the bathroom or living room, as well as more elongated ones for the hallway or anywhere else you want to see yourself full length. Choose from the following sizes (in cm): 80 x 60, 70 x 50, 90 x 60, 100 x 60, 100 x 80.

Our mirrors can come in any shape you need! Round, or just gently rounded to follow the modern trend of softening sharp edges. Rectangular or square for those who love classic elegance.
